Consumer Insights from Online Reviews Powered by Generative AI

Seamlessly integrate review analytics into your marketing, ecommerce, and product processes, driving strategic decision-making with high-quality data and real-time analysis.

The World's Leading Brands Are Using Revuze Generative AI For Consumer Insights

What Revuze’s Generative AI does

Automates consumer research

Revuze automatically collects unstructured data from multiple sources — such as eCommerce reviews, surveys and user-generated content — and then classifies, cleans and organizes it into actionable insights that describe consumer needs across different categories in a granular way.

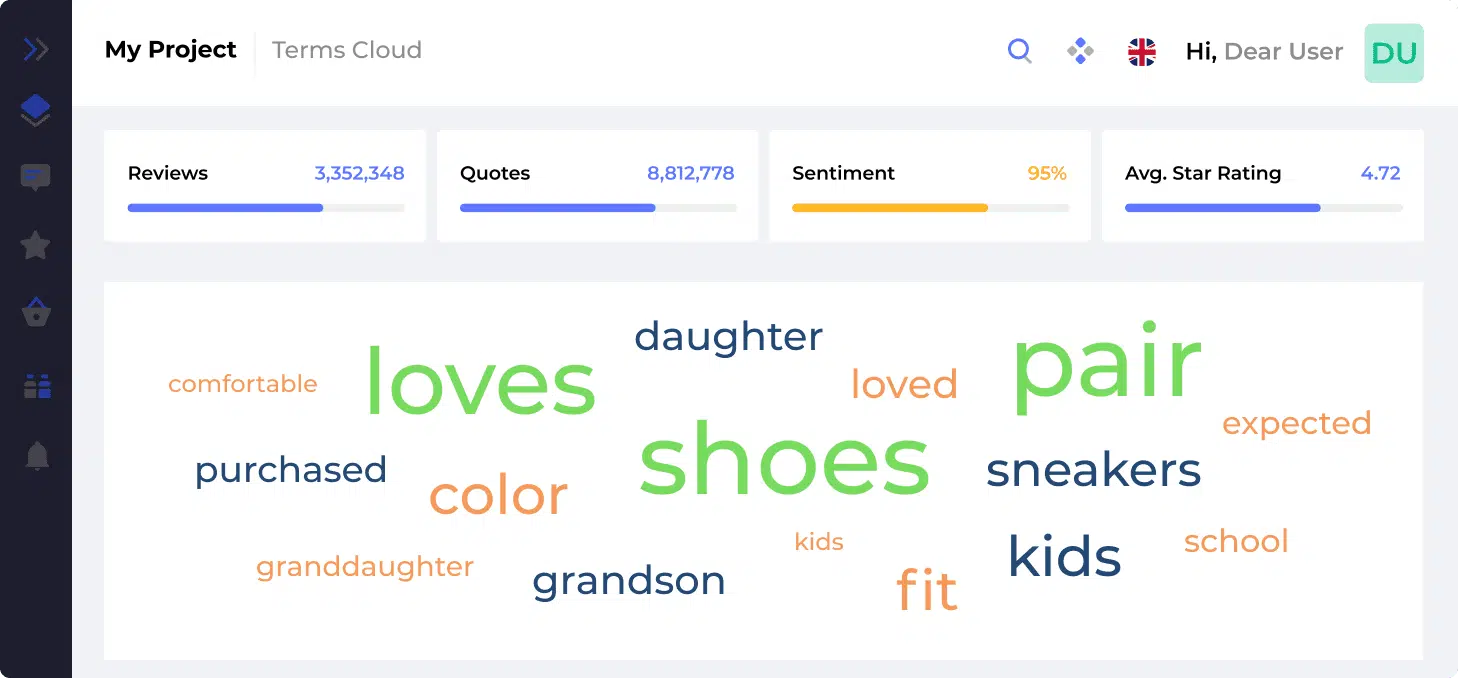

Identifies the most relevant topics

Revuze’s machine learning algorithms discover topics of conversation for each product category and build a unique taxonomy for each of them, without the need for any human interaction.

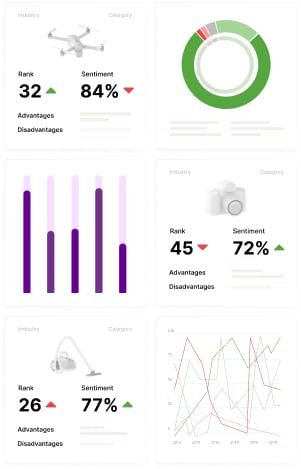

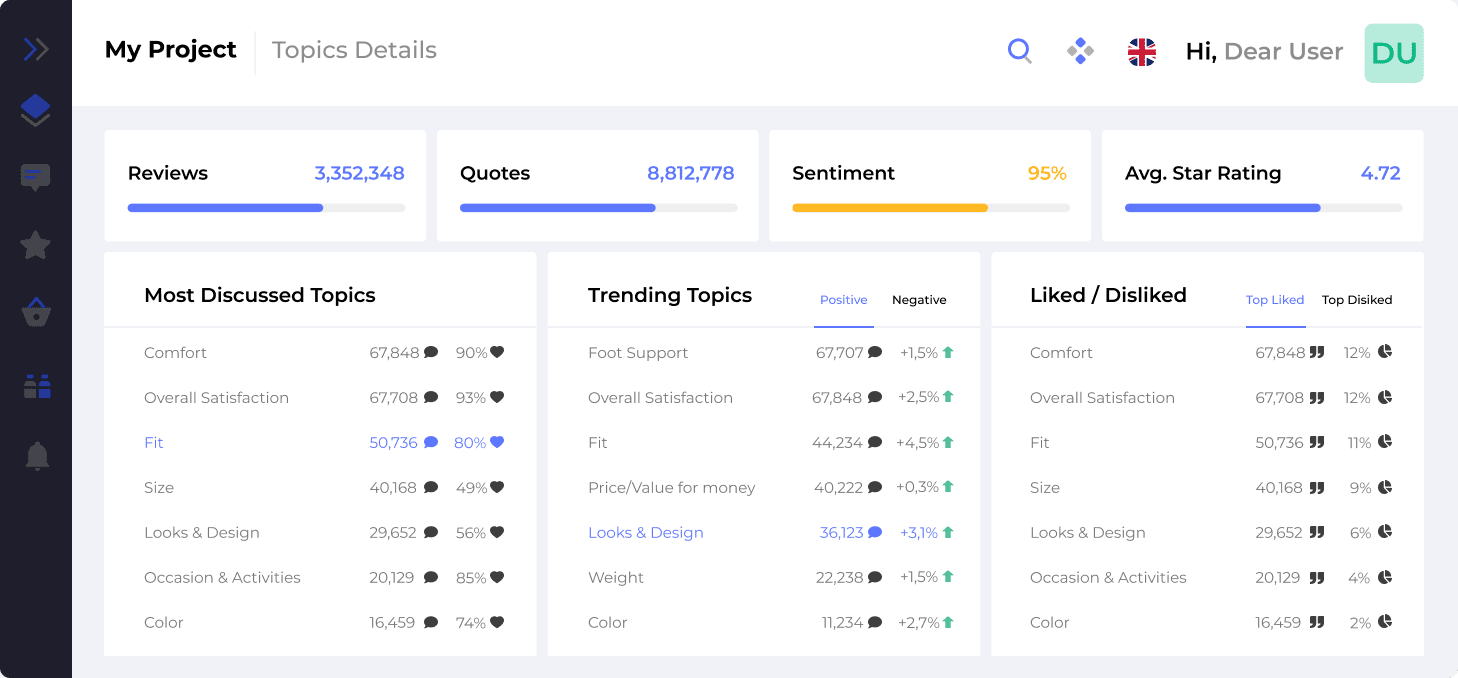

Detects customer sentiment

Revuze’s contextual intelligence understands topics, sentiment and context with no manual keyword definition, delivering actionable insights on customer satisfaction with exceptional accuracy.

A better way to get the insights you need

Hours instead of months

Traditional market reports take months to complete — by the time you receive them, they’re already outdated. With Revuze, you get the competitive insights you need in a matter of hours.

The insights you receive are easy to understand and immediately usable.

Classified, clean and granular data

Revuze automatically collects, categorizes, identifies, and extracts trends and topics from unstructured data – understanding context with exceptionally high precision and delivering truly actionable business insights.

Revuze’s contextual intelligence understands topics and sentiment regardless of the actual words customers use, with no manual keyword definition.

Easy onboarding process

No need to spend time defining keywords, identifying key phrases, or hiring expensive analysts. Revuze’s self-learning analytics engine, powered by a continual collection of customer insights alongside customer satisfaction, determines the sentiment towards any brand with great accuracy, all without human interaction.

Typical delivery duration of only 3 to 6 weeks: